What is Data Cleaning?

Clean data is data that is up to standard and ready for analysis, and it underpins important business decisions. Data cleaning, also known as data cleansing or data scrubbing, is an essential step if you want to build a culture of quality decision-making.

Data cleaning is the process of correcting or removing incorrect, corrupted, incorrectly formatted, duplicate, or incomplete data within a dataset. When a researcher or data handler combines data from multiple sources, there are many opportunities for data to be duplicated or mislabeled. Naturally, when the data is incorrect, the outcome is unreliable.

5 characteristics of quality data

- Validity. The degree to which your data conforms to defined business rules or constraints.

- Accuracy. The degree to which your data is close to the true values.

- Completeness. The degree to which all required data is known.

- Consistency. The degree to which your data is consistent within the same dataset and across multiple datasets.

- Uniformity. The degree to which the data is specified using the same unit of measure.

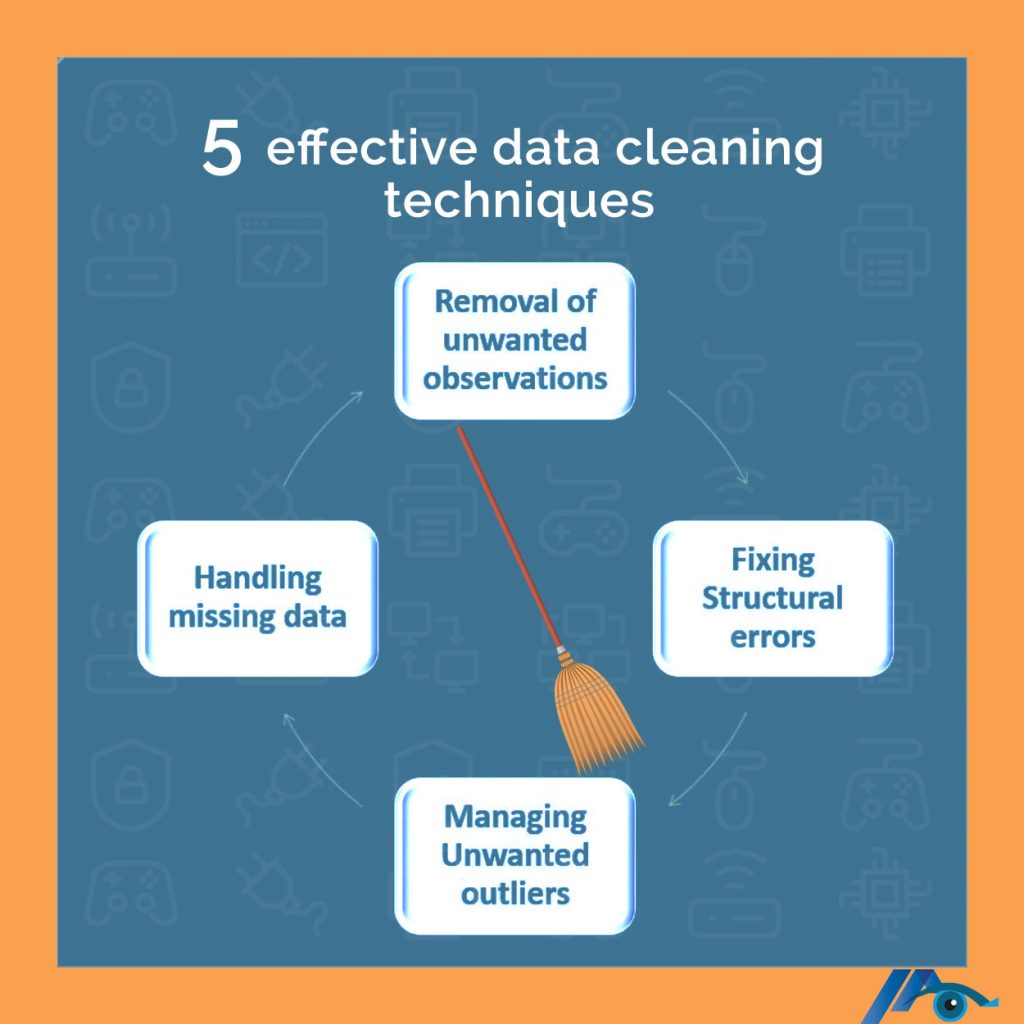
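Two of these characteristics, completeness and uniformity, are easy to express as small checks. The following is a minimal sketch in plain Python rather than a definitive implementation; the records, the field names (`height`, `unit`), and the helper functions are all hypothetical and chosen only for illustration.

```python
# Hypothetical records: field names and values are made up for illustration.
records = [
    {"id": 1, "height": 180.0, "unit": "cm"},
    {"id": 2, "height": 1.75, "unit": "m"},
    {"id": 3, "height": None, "unit": "cm"},
]


def completeness(rows, field):
    """Share of rows in which `field` has a known (non-None) value."""
    known = sum(1 for row in rows if row.get(field) is not None)
    return known / len(rows)


def to_cm(row):
    """Return a copy of the row with its height expressed in centimetres."""
    factor = {"cm": 1.0, "m": 100.0}[row["unit"]]
    height = None if row["height"] is None else row["height"] * factor
    return {**row, "height": height, "unit": "cm"}


# Completeness: how much of the required field is actually known.
height_completeness = completeness(records, "height")

# Uniformity: every height is now specified in the same unit of measure.
uniform = [to_cm(row) for row in records]
```

Here two of the three records have a known height, and after conversion every record uses centimetres, so the values can be compared directly.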

How is data cleaning done?

It depends on the type of data the company stores. A basic framework for data cleaning is as follows:

Step 1: Removing duplicate or irrelevant data entries

The first step is to remove unwanted observations, meaning duplicates and irrelevant entries. Duplication is one of the most common problems introduced during data collection: when data is combined from multiple sources, or received from clients or several departments, there are many opportunities for the same record to appear more than once. Most data analysis software can detect repeated records. Irrelevant data is data that does not fit the question you are studying. For example, if you are studying teenagers, records for 40 to 50 year olds do not belong in the dataset and will distort your results. Removing irrelevant observations makes analysis more efficient, minimizes distraction from your primary objective, and leaves a more manageable, better-performing dataset.
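As a rough illustration of this step, the sketch below drops exact duplicate rows and then filters out records outside the age group under study. It uses plain Python; the sample rows, the function names, and the 13 to 19 age range used for "teenagers" are assumptions made for the example.

```python
# Hypothetical survey rows combined from several sources.
rows = [
    {"name": "Ana", "age": 15},
    {"name": "Ben", "age": 17},
    {"name": "Ana", "age": 15},   # exact duplicate of the first row
    {"name": "Carl", "age": 45},  # outside the age group being studied
]


def drop_duplicates(rows):
    """Keep the first occurrence of each identical row, preserving order."""
    seen = set()
    unique = []
    for row in rows:
        key = tuple(sorted(row.items()))  # hashable fingerprint of the row
        if key not in seen:
            seen.add(key)
            unique.append(row)
    return unique


def keep_teenagers(rows, low=13, high=19):
    """Keep only rows whose age falls in the assumed teenage range."""
    return [row for row in rows if low <= row["age"] <= high]


cleaned = keep_teenagers(drop_duplicates(rows))
```

Only the first "Ana" row and the "Ben" row remain. In practice, duplicates are often near-matches rather than exact copies, so the fingerprint would usually be built from a few identifying fields after they have been normalized.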

Step 2: Fixing structural errors

Structural errors are inconsistencies such as typos and incorrect capitalization that you notice when transferring data. These inconsistencies can cause mislabeled categories. For example, "N/A" and "Not Applicable" may both appear in the same field, and they would be counted as separate categories unless they are merged.
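One way to handle this is to map every spelling of a label onto a single canonical form before counting categories. The sketch below assumes a hypothetical set of "not applicable" spellings and uses title case as the canonical form; both choices are illustrative, not a rule.

```python
# Assumed spellings that should all mean "not applicable".
MISSING_LABELS = {"n/a", "na", "not applicable"}


def normalize_label(value):
    """Trim and collapse whitespace, unify case, and merge N/A variants."""
    text = " ".join(value.split()).lower()
    if text in MISSING_LABELS:
        return "N/A"
    return text.title()


raw = ["new york", "New York ", "NEW YORK", "N/A", "Not Applicable"]
labels = [normalize_label(value) for value in raw]

# Five raw spellings collapse into two categories.
categories = sorted(set(labels))
```

Genuine typos (for example "New Yrok") need a lookup table or fuzzy matching on top of this, since case and whitespace normalization alone will not catch them.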

Step 3: Filtering unwanted outliers

Researchers often come across a one-off observation that does not appear to fit with the rest of the data. One reason could be improper data entry. If that is the case, it is a legitimate reason to remove the entry, and doing so makes the analysis built on that data more reliable. But the mere existence of an outlier does not mean it is incorrect; sometimes it is exactly what you need to prove or disprove a theory.
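The article does not prescribe a specific detection method. One common rule of thumb is the 1.5 × IQR rule, sketched below with Python's standard `statistics` module. The sample values and the function name are hypothetical, and the function only flags candidates so that a person can decide whether each one is a data-entry error or a genuine observation.

```python
import statistics


def flag_outliers(values, k=1.5):
    """Return values lying more than k * IQR outside the quartiles."""
    q1, _, q3 = statistics.quantiles(values, n=4)  # quartile cut points
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]


# Hypothetical ages from a teenage survey; 160 looks like a typo for 16.
ages = [14, 15, 15, 16, 16, 17, 17, 18, 160]
suspects = flag_outliers(ages)
```

Flagging rather than deleting keeps the decision with the analyst, which matches the point above that an outlier is not automatically wrong.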

Step 4: Handling missing data

Many algorithms do not accept missing values, so missing data cannot simply be ignored. There are three common ways to deal with it.

- Remove the observations that have missing values. Bear in mind that dropping an observation also drops information, so evaluate its importance before removing it.

- Fill in the missing value based on other observations, for example by using an average. Bear in mind that the value is then an assumption rather than an actual observation, which can cost the data some of its integrity.

- Lastly, you can alter the way the data is used so that it effectively navigates null values.
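The three options above can be sketched as follows. This is a minimal illustration in plain Python with hypothetical rows and field names; mean imputation is only one of several ways to fill a value, and the `score_imputed` flag is an assumption added so that filled-in values stay distinguishable from real ones.

```python
import statistics

# Hypothetical rows; None marks a missing score.
rows = [
    {"id": 1, "score": 80.0},
    {"id": 2, "score": None},
    {"id": 3, "score": 90.0},
]

# Option 1: drop the observations that have a missing score.
dropped = [row for row in rows if row["score"] is not None]

# Option 2: fill the gap with the mean of the known scores,
# and record that the value was assumed rather than observed.
mean_score = statistics.mean([row["score"] for row in dropped])
imputed = [
    {
        **row,
        "score": mean_score if row["score"] is None else row["score"],
        "score_imputed": row["score"] is None,
    }
    for row in rows
]


# Option 3: leave the nulls in place and make the calculation skip them.
def mean_ignoring_missing(rows, field):
    """Mean of the known values of `field`, or None if none are known."""
    known = [row[field] for row in rows if row[field] is not None]
    return statistics.mean(known) if known else None


overall = mean_ignoring_missing(rows, "score")
```

Option 1 shrinks the dataset, option 2 keeps its size at the cost of an assumed value, and option 3 keeps the raw data untouched but requires every downstream calculation to be null-aware.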

Step 5: Validation and QA

To validate your data, there are some questions you’ll need to answer.

- Does the data make sense?

- Does the data follow the appropriate rules for its field?

- Does the data prove or disprove your theory?

- Can you find trends in the data to help you form your next theory?

- Is there a quality issue with your data?
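Some of these questions need human judgment, but the one about field rules can be partly automated. The sketch below checks each row against a small set of hypothetical rules; the field names, the age range, and the deliberately loose email pattern are assumptions for illustration, not standards.

```python
import re

# Hypothetical per-field rules; real rules come from your own business constraints.
EMAIL_PATTERN = re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+")

RULES = {
    "age": lambda v: isinstance(v, int) and 0 <= v <= 120,
    "email": lambda v: isinstance(v, str) and EMAIL_PATTERN.fullmatch(v) is not None,
}


def validate(rows):
    """Return (row_index, field) pairs for every value that breaks a rule."""
    problems = []
    for index, row in enumerate(rows):
        for field, rule in RULES.items():
            if not rule(row.get(field)):
                problems.append((index, field))
    return problems


rows = [
    {"age": 16, "email": "ana@example.com"},
    {"age": -3, "email": "ben@example.com"},  # impossible age
    {"age": 17, "email": "not-an-email"},     # malformed email
]
problems = validate(rows)
```

The result lists which row and field failed, which makes it easier to trace a quality issue back to its source instead of silently dropping the record.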

Because technology is so heavily involved in data analysis and algorithm development, the cleaner the data, the more accurate the results. With accurate results, your business strategy and decision-making stand on firmer ground. Dirty data can lead to false conclusions, and false conclusions can lead to an embarrassing situation with top management. Hence it is important to create a culture of clean, quality data.